Photo by Mojahid Mottakin on Unsplash

How to give Prompts to AI Models Effectively

Techniques for optimizing prompts and model responses.

In this article, we are going to learn the technique of prompting the AI models and how we can prompt effectively to get the best-fit response.

Prerequisites

You only need to have some patience and the ChatGPT web interface. 😉

What are we going to focus on?

Developing our prompts effectively using different tactics and principles to improve the quality of our prompt and also learn about the common use cases of LLMs (Large Language Models) which are:

Summarizing

Inferring

Transforming

Expanding

What is the need for LLMs for a developer?

LLMs are powerful AI models, that learn language patterns from vast datasets through pre-training and fine-tuning. They enable innovative applications, enhance user experiences, automate tasks, and solve language challenges.

There are two types of LLMs in general:

| Base LLM | Instruction Tuned LLM |

| Predicts based on text training data | Tries to follow instructions and also refined using RLHF (Reinforcement Learning with Human Feedback). |

Guidelines for Prompting

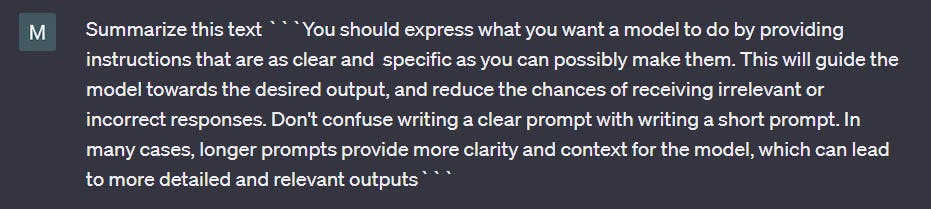

Principle 1: Write clear and specific instructions

Please understand that clear ≠ short. Because in many cases longer prompts improve clarity and context leading to more detailed outputs.

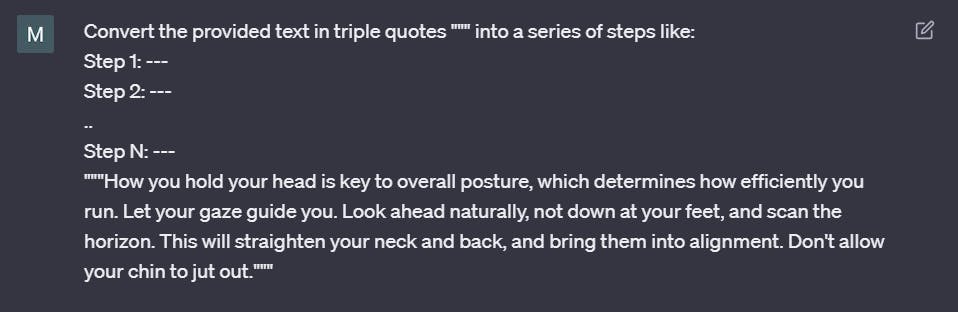

Strategy 1: Using delimiters like:

Triple quotes: """

Triple backticks: ```

Triple dashes: ---

Angle brackets: < >

XML tags: <tag> </tag>

Example:

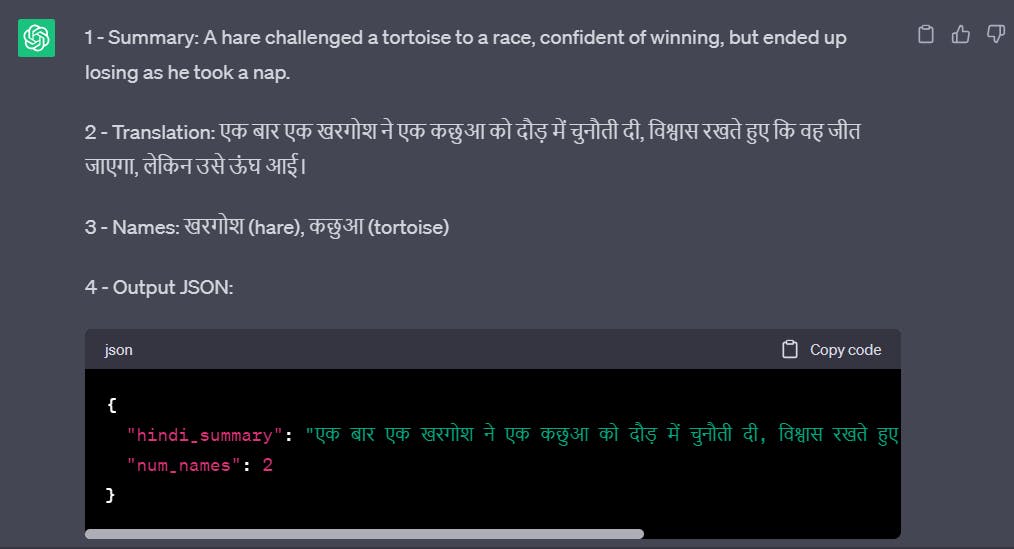

Prompt:

Output:

Using delimiters can also help you to avoid prompt injections. This makes it clear to the model and it works by ignoring the past instructions and focusing on the current task. Prompt injections are biased, harmful instructions to manipulate the AI model's output.

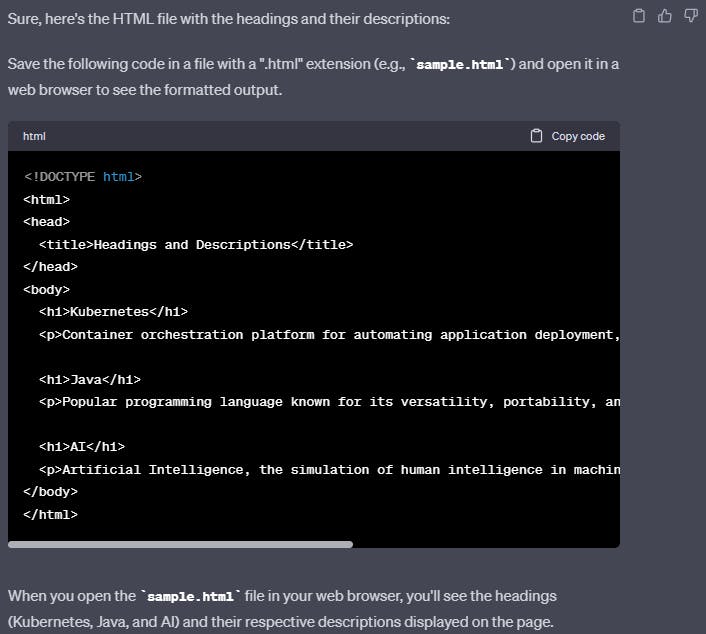

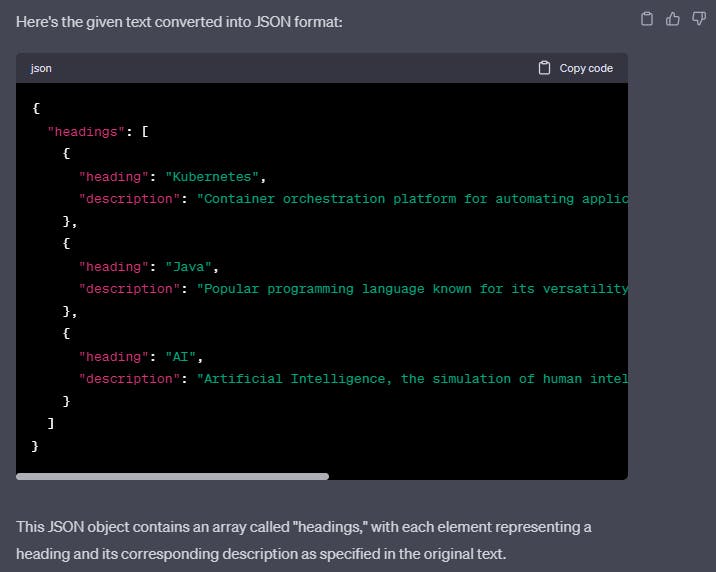

Strategy 2: Ask for a structured output of HTML, JSON, XML, etc.

Example:

Prompt:

Output:

Converting the same file into JSON format:

Similarly, you can produce HTML or JSON files for a particular type of text.

Strategy 3: Check whether conditions are satisfied, and check the assumptions required to do the task

Example:

Prompt:

Output:

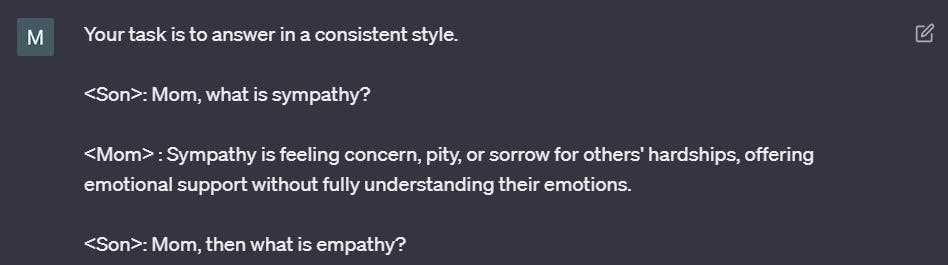

Strategy 4: Few-shot prompting. Give successful examples of completing tasks then ask the model to perform the task

Example:

Prompt:

Output:

In the example above, the model is provided with an example and you can see the format in which it has provided the output.

Principle 2: Give the model time to think

If we give the model a complex task and don't give enough time to think, either a shorter amount of time or a smaller number of words, then it is more likely to be incorrect. So, in such cases, we have to instruct the model to think longer about a problem, which means it's spending more computational effort on the task.

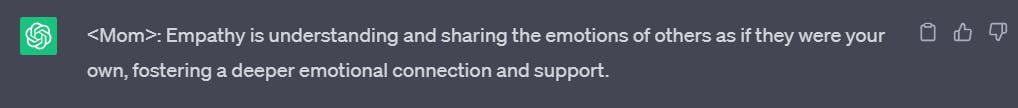

Strategy 1: Specify the steps required to complete the task.

Example:

Prompt:

Output:

Strategy 2: Instruct the model to work out its own solution before rushing to a conclusion.

We get better results when we explicitly instruct the models to reason out first their solutions before concluding.

Let's see an interesting example related to the above strategy:

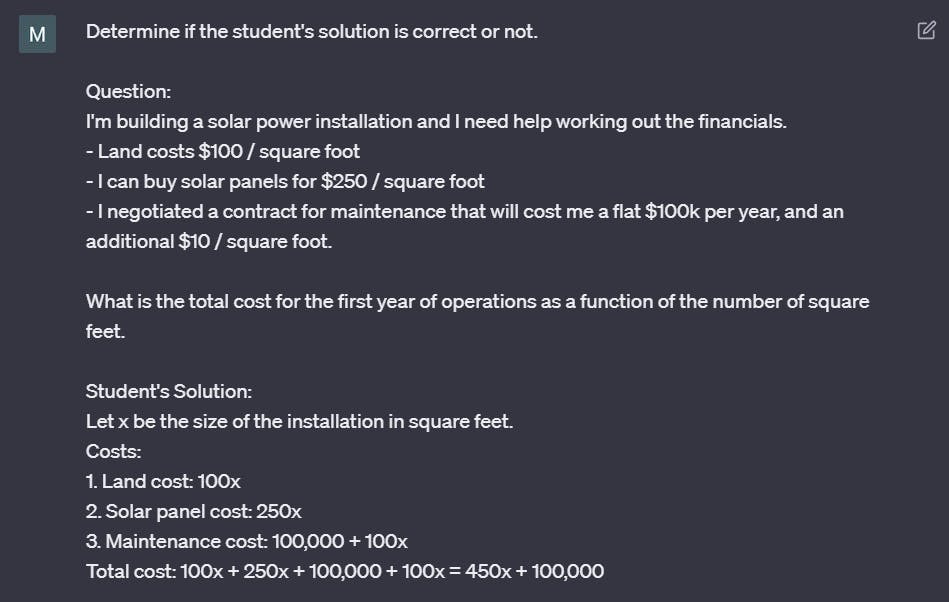

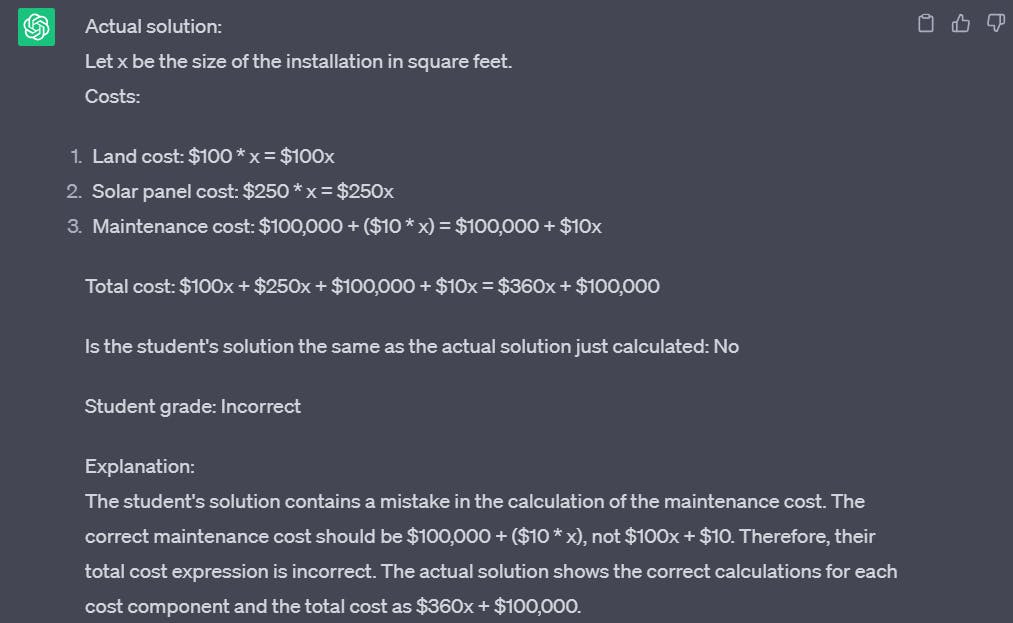

(Without using strategy 2)

Prompt:

Correct Answer: 360x + 100,000

Output:

You can see the model has responded as the "solution is correct".

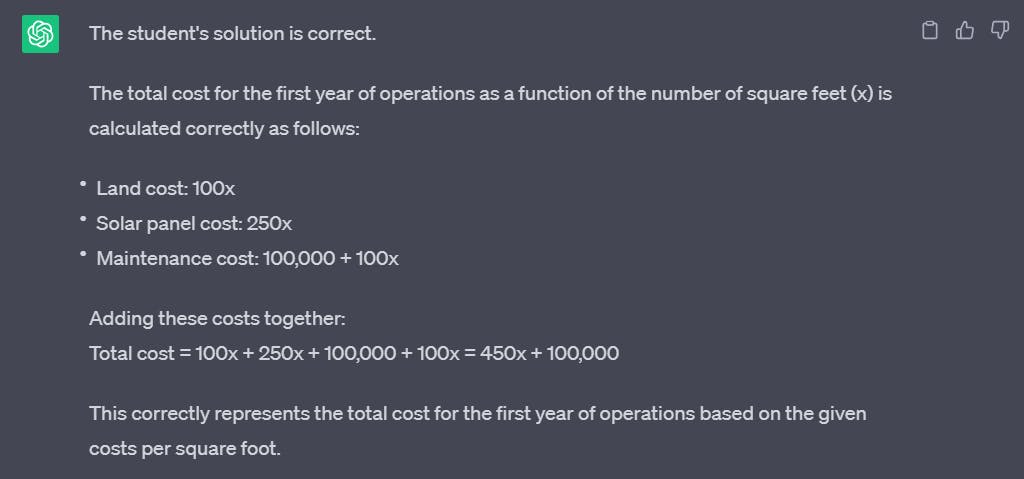

Let's use our Strategy 2 and then see how the model responds:

Prompt: (Note that in the given prompt we are also using the strategies mentioned previously, and it is pretty long)

Output:

Now, you can understand the importance of strategy 2.

How to develop prompts in an effective manner?

You might have seen certain articles on the internet saying 30 perfect prompts or the only prompt guide you need, Best 50 prompts, etc. are just click baits.

In reality, there doesn't exist any perfect prompt.

To achieve a certain task you will not have the desired output at the first go. It may take many attempts to develop the prompt to make it such that it will provide you with the best-fit output.

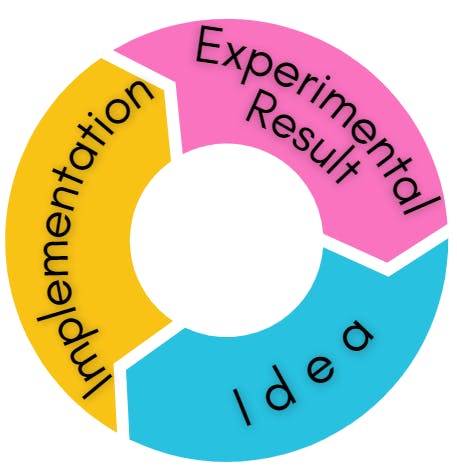

One of the ways to do so is by developing the solution Iteratively.

Starting with an idea -> you implement that idea, transform it into a prompt -> And then you get the result. But the result may not be the one you require.

Now let's see an example for the same and refine our prompt accordingly:

The text that we want to work upon:

OVERVIEW

- Part of a beautiful family of mid-century inspired office furniture,

including filing cabinets, desks, bookcases, meeting tables, and more.

- Several options of shell color and base finishes.

- Available with plastic back and front upholstery (SWC-100)

or full upholstery (SWC-110) in 10 fabric and 6 leather options.

- Base finish options are: stainless steel, matte black,

gloss white, or chrome.

- Chair is available with or without armrests.

- Suitable for home or business settings.

- Qualified for contract use.

CONSTRUCTION

- 5-wheel plastic coated aluminum base.

- Pneumatic chair adjust for easy raise/lower action.

DIMENSIONS

- WIDTH 53 CM | 20.87”

- DEPTH 51 CM | 20.08”

- HEIGHT 80 CM | 31.50”

- SEAT HEIGHT 44 CM | 17.32”

- SEAT DEPTH 41 CM | 16.14”

OPTIONS

- Soft or hard-floor caster options.

- Two choices of seat foam densities:

medium (1.8 lb/ft3) or high (2.8 lb/ft3)

- Armless or 8 position PU armrests

MATERIALS

SHELL BASE GLIDER

- Cast Aluminum with modified nylon PA6/PA66 coating.

- Shell thickness: 10 mm.

SEAT

- HD36 foam

COUNTRY OF ORIGIN

- Italy

Now we will work on a series of prompts and understand the process of how we are refining it every time.

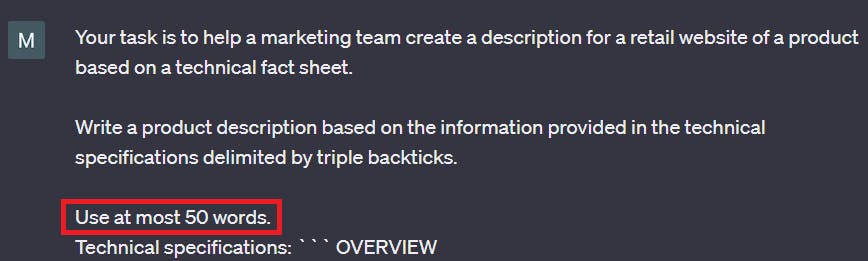

Prompt 1:

Include the technical specification within triple backticks

Output 1:

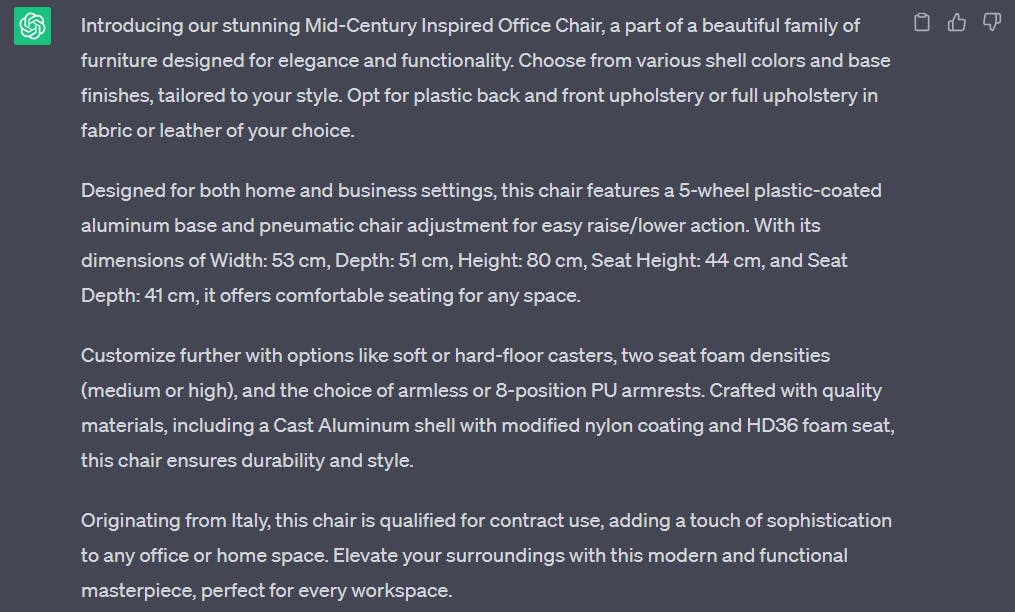

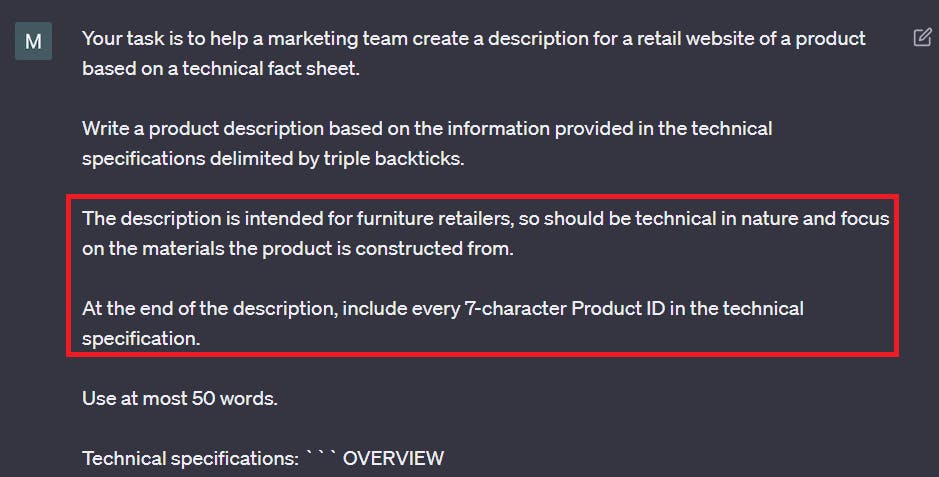

Prompt 2:

Output 2:

Similarly, you can try for combinations like 3 sentences, 280 characters, etc.

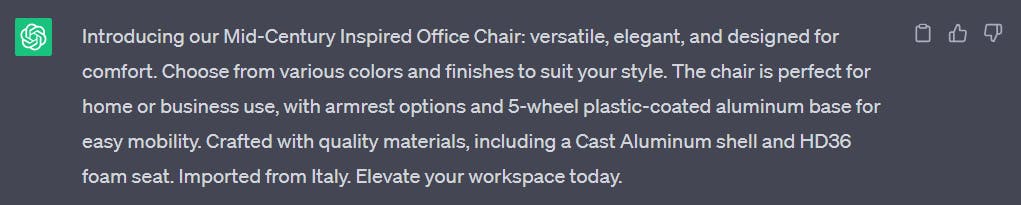

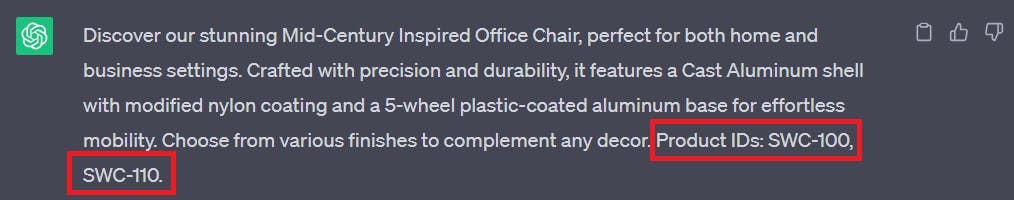

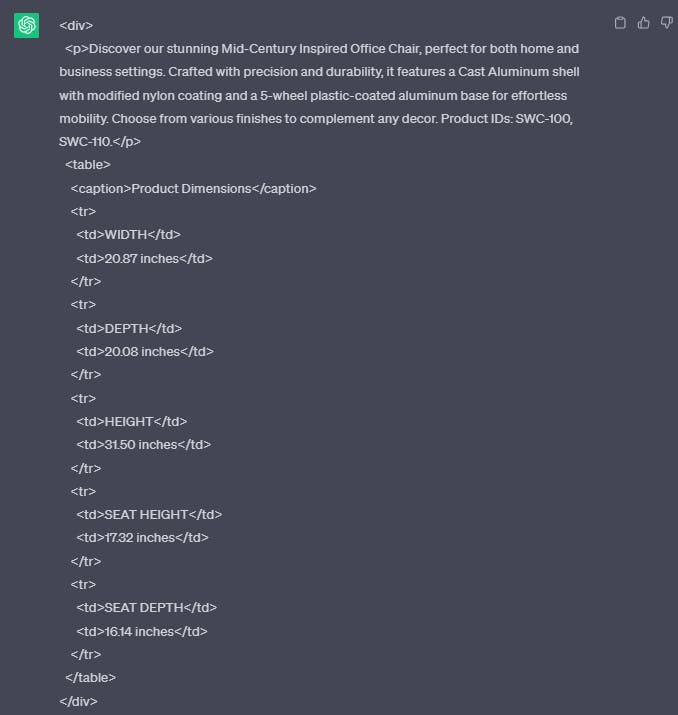

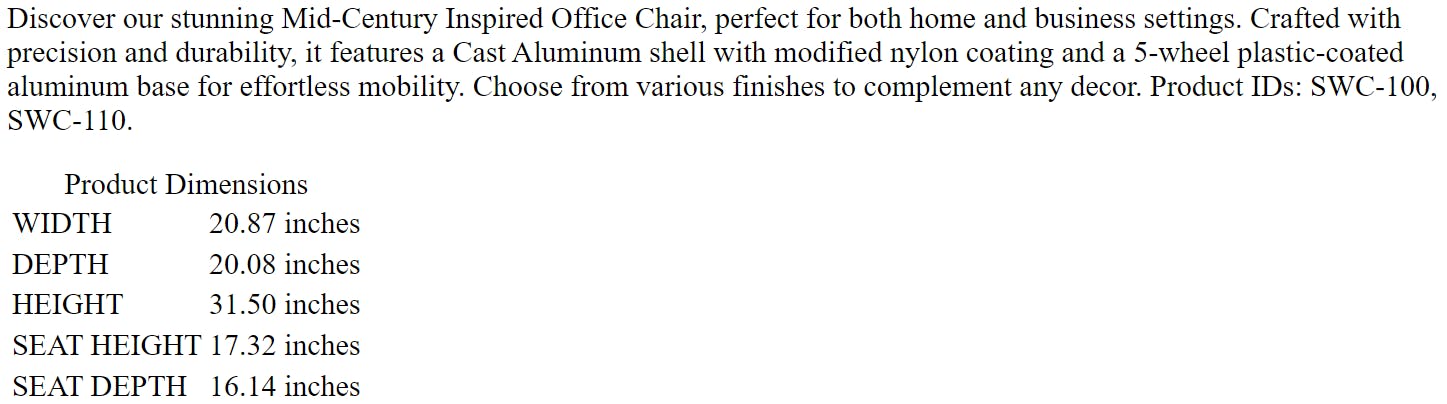

Prompt 3:

Output 3:

You can see the model has included more technical information rather than just describing it in general.

Prompt 4:

Output 4:

Website view:

Iterative Process that we followed above:

Try something

Analyze where the result does not give what you think

Clarify instructions, give more time to think

Refine prompts with a batch of examples

Evaluate your prompts using a broader example set, testing different prompts on many types of texts for average performance and not just one as we did here.

That was a pretty long topic.

Now let's move on to the four main areas for which AI models are used the most.

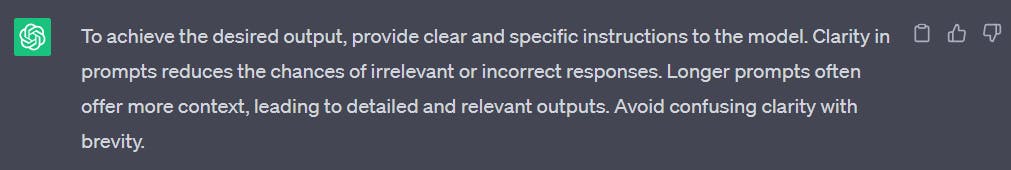

Summarizing

General summarizing of text

Summarizing for a specific purpose. (like for a particular department of the product)

You can also extract and provide the information inside the summary. You can see the example of this case in the above topic where we told the model to include more technical information.

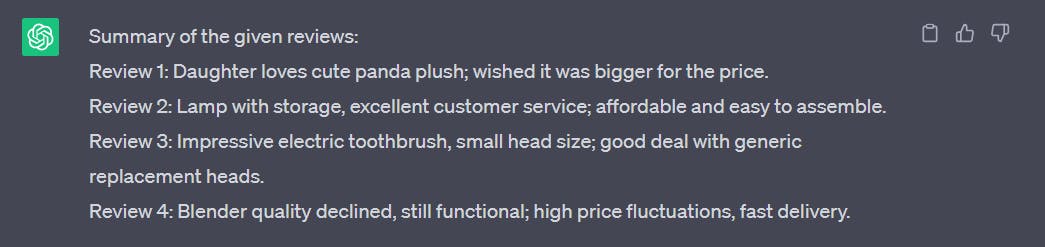

Now you can also ask it to generate summaries for multiple reviews at a time by using delimiters and a particular format.

Example:

Prompt:

I have not included the reviews here because they are too long. You can work with your own examples.

Output:

Inferring

You can use AI models to infer the text and extract a sentiment of a text, positive or negative. But in a traditional method, you would have to train the model for that particular task, deploy it and then work amongst the discrepancies.

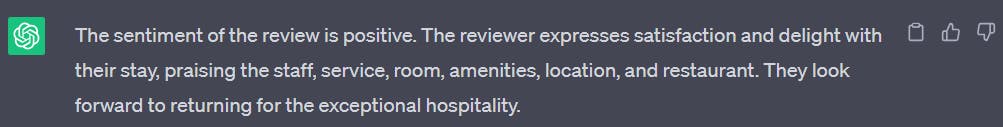

Example:

Prompt:

Output:

Now you can instruct the model to just give a review in just one word or one sentence.

NOTE: LLMs are pretty good at extracting specific things out of the text. Information extraction is a part of NLP (Natural Language Processing), that takes a piece of text and extracts certain things you want to know from the text.

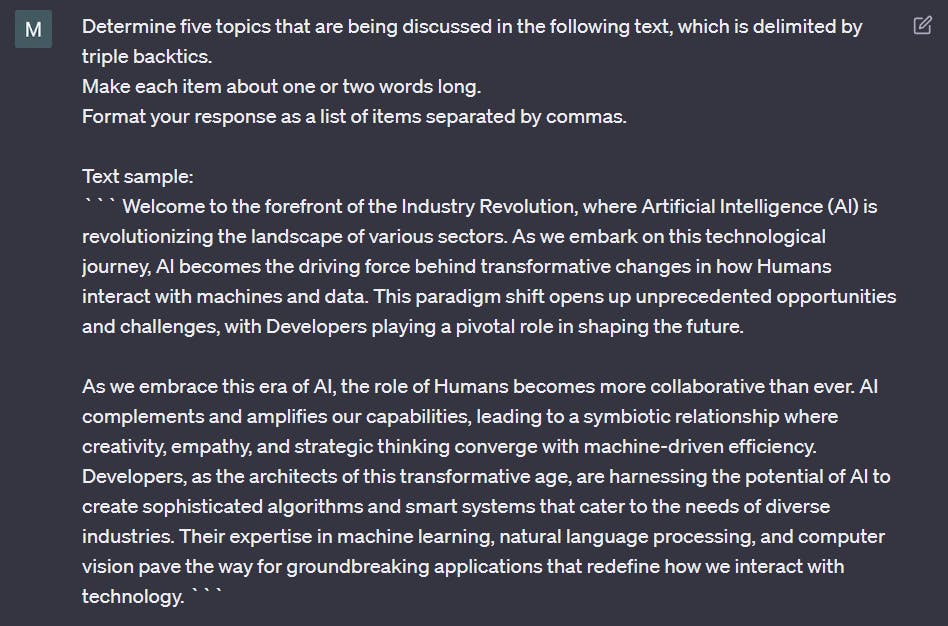

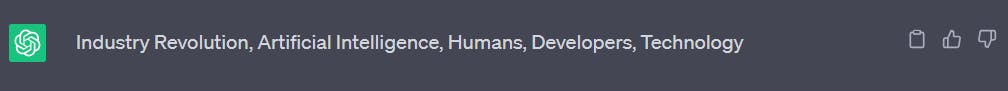

Inferring the topics in the text:

Prompt:

Output:

Now, you can also infer if any text has information related to the above topics.

Prompt:

Output:

This is sometimes called a Zero-Shot in Machine Learning because we didn't give it any labelled data.

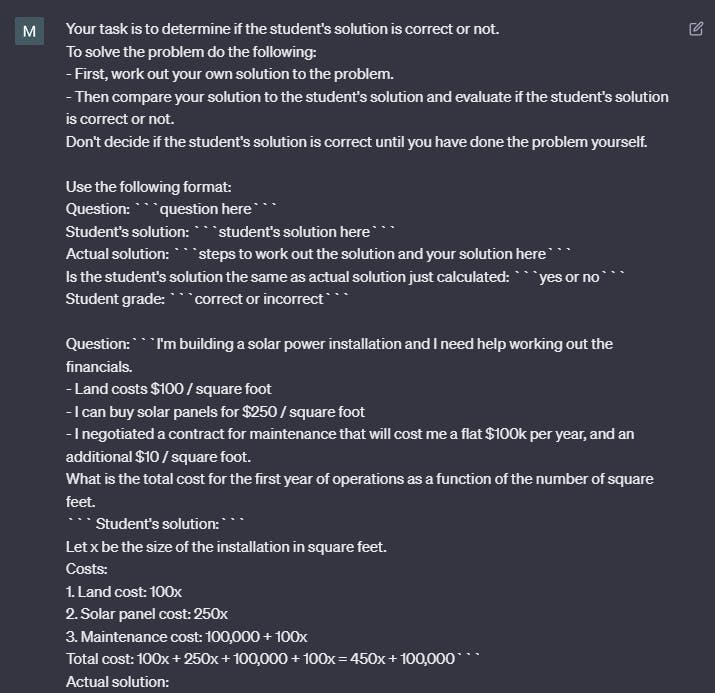

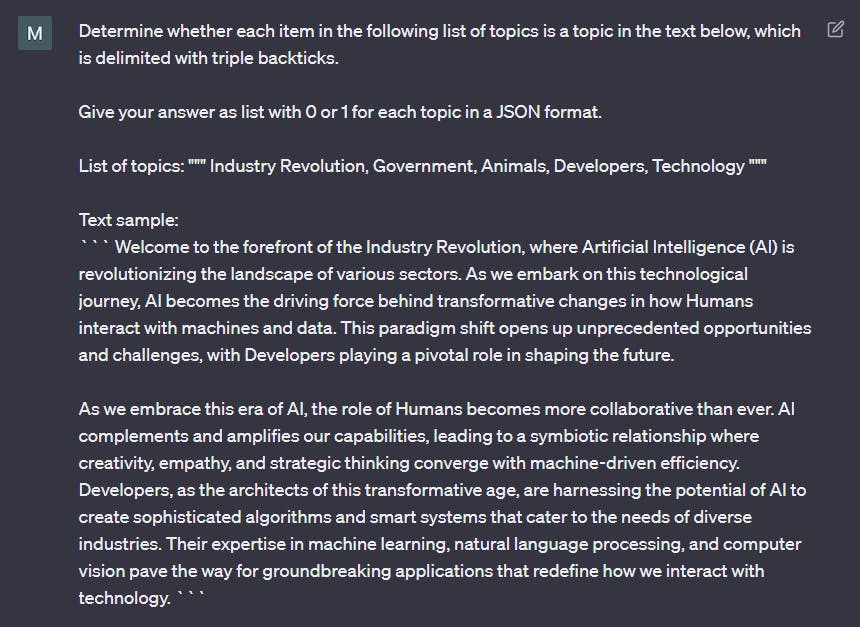

Transforming

Translating a piece of text into another language.

Performing multi-language text translations.

Language identification for the given text.

Now you could easily write these types of prompts using the guidelines and principles mentioned above.

Translating the piece of text to a formal or informal tone.

Example:

Prompt:

Output:

Transforming from one format to another like JSON to HTML, etc. You have seen some examples above, try some of your examples.

Spell Check and Grammar Checking. Also, you can convert it to your desired style and tone based on your requirement.

Expanding

Expanding means to convert the given piece of text, into a longer piece of text such as an email or an essay about some topic. You can use it as your brainstorming partner.

Caution: Responsibly use this capability. Because it might be used to produce a lot of spam.

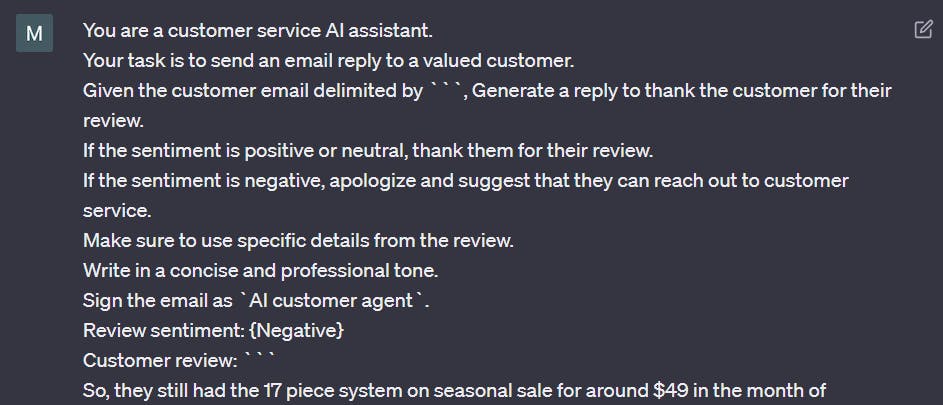

Here let's see an example of how you can reply to a customer being an AI customer agent.

Prompt:

I have not included the review here because it is too long. But you can try with an example of your own.

Output:

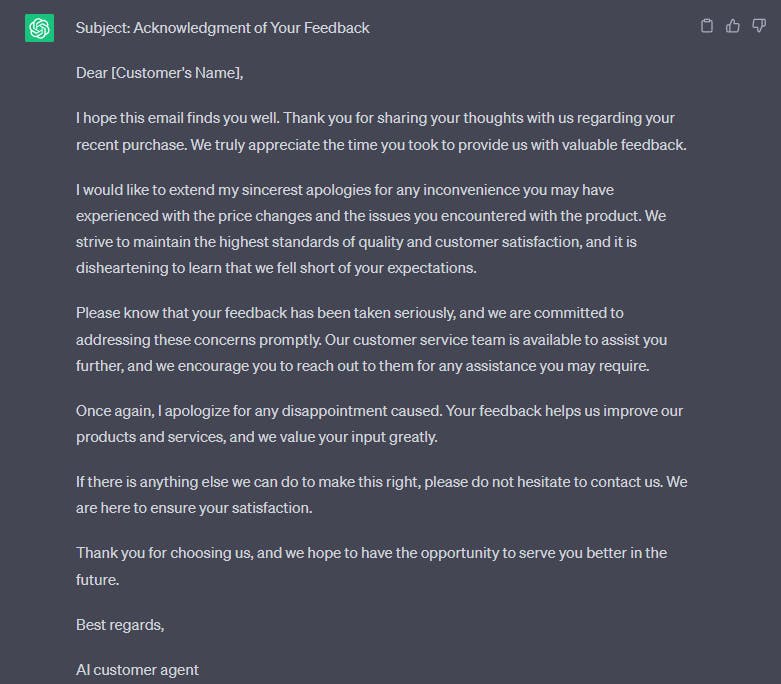

Model Limitations

Fabricated Ideas Hallucinations - Make statements that are plausible but are not true.

Example:

Prompt:

Output:

Now this can be dangerous because it sounds pretty realistic. This is a known weakness of the models and the companies are still working to resolve them.

Tactics to reduce hallucination:

First, find relevant information, then answer the question based on the relevant information.

Conclusion

The key to being an effective prompt engineer is about having a good process to develop prompts that are effective for your application and knowing the perfect prompt.

Prompt engineering techniques help improve AI model responses by providing clear, structured instructions and examples. Key tactics include delimiting prompts, specifying output formats, instructing the model to reason through tasks, and iteratively refining prompts based on results. LLMs can be used for summarizing, inferring, transforming and expanding text.

I hope you got to learn some new techniques and some principles and you would use them on your prompts to the AI models.

This blog was inspired by one of the Short Courses offered by

DeepLearning.AI - ChatGPT Prompt Engineering for Developers

Thank you for reading until the end. You can follow me on Twitter and LinkedIn.